In the context of DevOps, a deployment strategy is the process or methodology used to introduce new features or software upgrades into production settings with the least amount of downtime and highest level of efficiency. Deployment techniques play a crucial role in DevOps processes by automating and streamlining the process of distributing updates to end users. In order to create dependable, scalable, and continuous deployment pipelines, these strategies cover a wide range of methods, instruments, and best practices. In DevOps, some typical deployment tactics are as follows:

Manual Deployment Strategy

Software updates can be released simply with manual deployment, which involves human participation at every stage. Developers and operations teams use this approach to deploy changes to the production environment by manually transferring files, running scripts, and carrying out other essential tasks. Although straightforward in theory, manual deployment can be time-consuming and error-prone, particularly when the application's complexity or update frequency rise. Ensuring that all procedures are done accurately and in the correct order necessitates painstaking attention to detail and precise coordination. For large-scale or quickly changing systems, manual deployment is less appropriate since it lacks the automation and consistency provided by alternative deployment methodologies. Even yet, there are situations when the danger of automation failures outweighs the benefits of automation, or applications with stringent regulatory requirements where human oversight is required that make manual deployment the better choice, despite its drawbacks. But, in order to increase productivity, dependability, and agility, more automated techniques like continuous integration and continuous deployment (CI/CD) frequently augment or replace manual deployment in the majority of contemporary software development workflows.

Continuous Deployment Strategy

Code updates are automatically sent to production environments using continuous deployment (CD), a software development technique, as soon as they are available, usually following the completion of automated tests. Through quicker feedback loops and a shorter time-to-market, this method helps developers to quickly and continually provide changes to customers. In order to automate the build, test, and deployment procedures, Continuous Deployment integrates CI/CD pipelines. The deployment method is made smooth and effective by these pipelines, which are activated automatically each time changes are sent to the version control system. Teams may concentrate on developing and enhancing features rather than handling deployments when they use Continuous Deployment, which automates the deployment process to minimize manual involvement and lower the possibility of human mistake.

Because frequent releases and quick iterations are necessary to maintain competitiveness in ever-changing markets, continuous deployment works especially effectively in agile development settings. To assure the stability and dependability of deployments, however, a strong level of trust in automated testing and monitoring systems is necessary. Consistent deployment, in general, encourages creativity and agility in software development by enabling teams to provide value to users promptly and consistently.

Version control, automated testing, and deployment automation are the main elements of a continuous deployment pipeline. Developers maintain the source code and keep track of their modifications using version control systems like Git. Jenkins or Travis CI are examples of continuous integration servers that keep an eye on the version control system for any new code changes and automatically start the build and test procedures when they do. In order to make sure that code changes adhere to quality standards and do not cause regressions, automated tests, such as unit tests, integration tests, and end-to-end tests, are run as part of the continuous integration process. Last but not least, tested code changes are automatically deployed to production environments using deployment automation technologies like Docker, Kubernetes, or Ansible.

Blue-Green Deployment Strategy

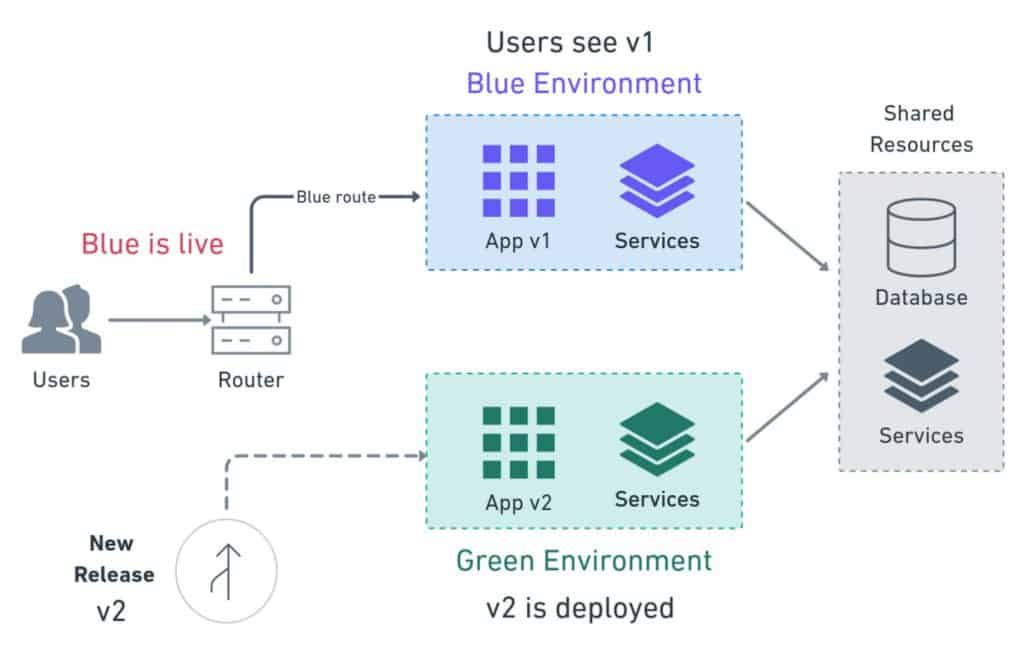

Organizations may deliver software application upgrades with the least amount of risk and disruption by using the blue-green deployment deployment approach. Using this method, two production environments are kept in perfect condition: an inactive environment called "green" and an active environment called "blue". Through the utilization of this dual-environment configuration, enterprises may effortlessly transition between the active and inactive environments, enabling flawless deployment and rollback procedures.

When the blue environment is used as the primary production environment to handle user traffic and requests, this is how the blue-green deployment process usually starts. Acting as a mirror image of the blue environment, the green environment is dormant in the meanwhile, receiving no traffic from users. The changes are first applied to the green environment separately from the production environment when it comes time to deploy a new version of the application.

In-depth testing and validation are carried out to make sure the updated application performs as anticipated and satisfies performance and reliability requirements before traffic is moved to the green environment. To find any possible problems or flaws that could have been introduced during the deployment process, this testing stage is essential. Before the new version goes online, it is possible to validate it using a variety of testing methods, such as automated tests, manual tests, and user acceptability testing.

The traffic switch is the next stage of the deployment procedure that occurs after the new version has been extensively tested and verified in a green environment. In this stage, user traffic is routed from the blue environment to the green environment by updating the routing configuration. To provide a smooth transition for end users, this shift is usually accomplished via load balancer setups or network-level routing adjustments.

Conclusively, blue-green deployment is an effective deployment approach that enables companies to roll out software program changes with the least amount of downtime, lowest possible risk, and maximum dependability. Organizations may guarantee continuous availability and uptime for their applications, as well as facilitate quick rollback and risk mitigation, by keeping two identical production environments and smoothly transitioning between them. Blue-green deployment has emerged as a key component of contemporary software delivery methodologies, allowing enterprises to provide value to their consumers more successfully and economically. It does this by supporting automation, infrastructure as code, and progressive rollout.

Canary Deployment Strategy

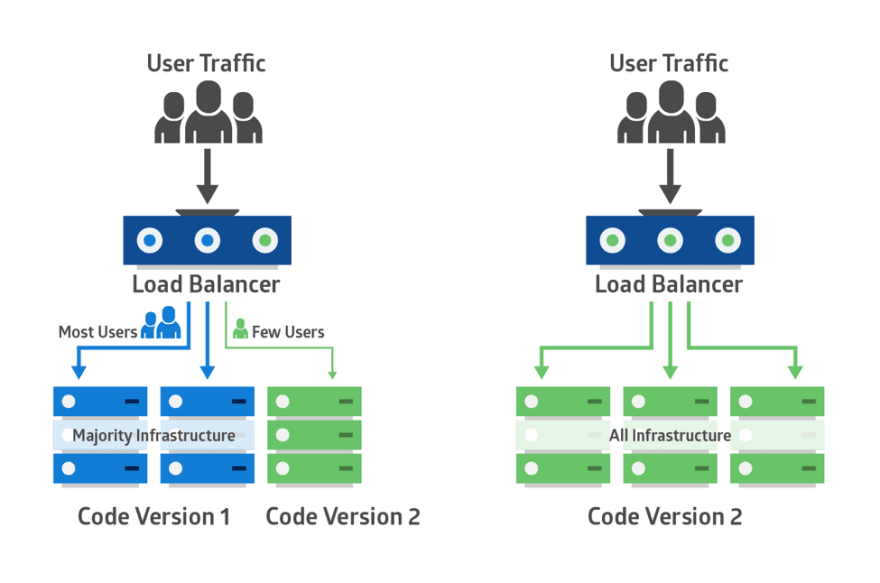

In order to reduce the risks connected with new releases or upgrades, software development and release management employ the sophisticated deployment technique known as canary deployment. This methodology, which gets its name from the canary employed in coal mines to identify harmful gasses, entails introducing modifications one small group of users or servers at a time before making them available to the full user population or production setting. The fundamental function of canary deployment is to serve as an early warning system for developers, enabling them to identify and resolve possible problems before they have an effect on a larger audience.

Canary deployment is based on the fundamental idea of reducing risk through carefully monitored experimentation. Rather than releasing the updated version of the program to every user at once, developers deliver it to a small group of users—often referred to as the "canary group" or "canary cohort." Because they usually make up a tiny portion of all users, a measured and slow deployment is possible. Developers are able to assess the effects of the modifications and decide whether to move forward with the entire deployment by keeping an eye on the performance, stability, and user input from this initial group.

Careful planning and cooperation across several software development lifecycle phases are necessary for the successful implementation of canary deployment. First, a deployment pipeline that is designed especially for canary releases is created. This pipeline consists of components for implementing changes to the canary group, tracking key performance indicators (KPIs), gathering user input, and, in the event of a problem, rolling back modifications. To ensure consistency and dependability throughout the deployment, automation is essential in optimizing these operations.

As a result, developers may release updates with confidence and reduce the chance of interruptions thanks to the potent software release management strategy provided by canary deployment. Updates may be sent out gradually to a limited group of users or servers so developers can evaluate the effects of changes in a safe environment before deciding when to move forward with the full deployment. Successful canary deployment ensures the dependability and robustness of software programs in today's fast-paced digital ecosystem by including automation, monitoring, user feedback, and rollback processes.

Rolling Deployment Strategy

Rolling deployment is a deployment approach that ensures high availability and dependability while minimizing user interruption by updating software programs gradually and under supervision. With the help of this technique, users may continue to use the program while it is being deployed, either one instance at a time or in small batches. Large-scale applications requiring careful management of the risk of service disruption and minimal downtime due to many instances operating in a distributed environment are especially well-suited for rolling deployment.

Rolling deployment refers to the practice of upgrading application instances gradually as opposed to all at once. By reducing the blast radius of any possible problems, this progressive approach helps reduce the chance of deploying flawed or incompatible modifications. With rolling deployment, changes may be made gradually, putting only a portion of the program offline at any one moment, as opposed to shutting down the complete application all at once. Because traffic is immediately redirected to the unaffected instances, this guarantees that users can still access the application during the deployment process.

The first step in rolling deployment is usually to start the deployment on a limited group of instances, which is also known as a "rolling window." One by one or in small groups, these instances are pulled down, leaving the remaining instances to handle user traffic. All necessary customizations and database migrations are carried out, and the updated version of the application is deployed to an offline instance. When the deployment is finished, the instance is restarted and goes through health checks to make sure everything is working properly before it is included back into the application pool.

Rolling deployment, in summary, is a deployment technique that strikes a compromise between availability, flexibility, and dependability, which makes it ideal for contemporary distributed systems. Rolling deployment reduces the risk of service disruption and allows enterprises to roll out changes fast and effectively by upgrading instances progressively and ensuring continuous service availability. Delivering high-quality software with the least amount of downtime and maximum agility is something that rolling deployment can help enterprises do with the correct tools and policies in place.

Recreate Deployment or Basic Deployment Strategy

The basic deployment method known as "basic deployment" updates every server or node for a certain web application at the same time. In a basic deployment, customers may enjoy the newly added functionality by effectively taking the server offline, recreating it, and hosting it again. A web application with simple deployment will have significant downtime when a new version or update is deployed, which may affect network users. The most dangerous deployment approach is the deployment method's inability to be quickly rolled back, which is another drawback.

It would be impossible to predict how people would react to the updated version if there are bugs or faults in the new version, therefore it would have to be pulled down again, lengthening the downtime, and then fixed or reorganized to the prior version. Basic deployment, on the other hand, is less expensive than the blue-green deployment approach and is appropriate for companies that have extended off-peak hours where downtime wouldn't be harmful.

Multi-Service Deployment or Microservices Deployment Strategy

Microservices deployment, often referred to as multi-service deployment, is the process of implementing sophisticated applications made up of several separate services that cooperate to provide the application's overall functionality. Because each service is created, implemented, and maintained separately, there is more scalability, fault isolation, and agility. When using a multi-service deployment, each service usually has its own database, dependencies, and codebase. It also uses well-defined APIs to interface with other services.

Each service may be expanded and modified individually during deployment, allowing for quick iteration and the release of new features. Multi-service deployments frequently employ orchestration tools like Kubernetes and containerization technologies like Docker to bundle and manage services uniformly across many environments.

When it comes to multi-service architectures, deployment techniques like rolling, canary, and blue-green deployment can be used to guarantee smooth updates with the least amount of user interference. The stability and scalability of multi-service deployments may also be increased by utilizing service mesh technologies like as Istio or Linkerd to manage traffic, handle service-to-service communication, and provide observability.

Although multi-service deployment has several advantages overall, such as increased fault tolerance, scalability, and agility, it also makes managing inter-service communication, versioning, and deployment orchestration more difficult. Successful multi-service deployment at scale requires best practices, automation, and effective tooling.

Shadow Deployment Strategy

Software developers may test upgrades or new features alongside live production systems by using a technique called "shadow deployment," which keeps end users unaffected. In short, it's the process of introducing a new version of an application or service into a parallel environment so that it may function alongside the existing one, out of the users' view. Developers and testers may see how the new version performs in real-world scenarios without running the risk of interfering with the primary production system by allocating a fraction of the traffic or workload to this shadow environment. This strategy reduces the possibility of faults or performance problems and ensures a smoother transition by enabling extensive testing and validation of the new features or updates prior to their complete rollout to all users.

And once the new version has been thoroughly tested and deemed stable, it can be promoted to replace the existing production environment, completing the deployment process seamlessly.