Docker Swarm and Kubernetes are two widely used container orchestration platforms. Both simplify the management of containerized applications and improve scalability, resilience, and automation in modern software development and deployment.

Docker Swarm, developed by Docker, Inc., is the native clustering and orchestration tool for Docker containers. It lets users deploy, manage, and scale containerized applications across a cluster of Docker hosts. Docker Swarm takes a simpler approach than Kubernetes, which makes it well suited to small-scale application deployments.

Kubernetes, on the other hand, was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). It is a powerful open-source platform for automating the deployment, scaling, and management of containerized applications. Kubernetes offers a richer feature set and a larger ecosystem, making it a strong choice for large-scale, complex containerized environments.

What is Docker Swarm?

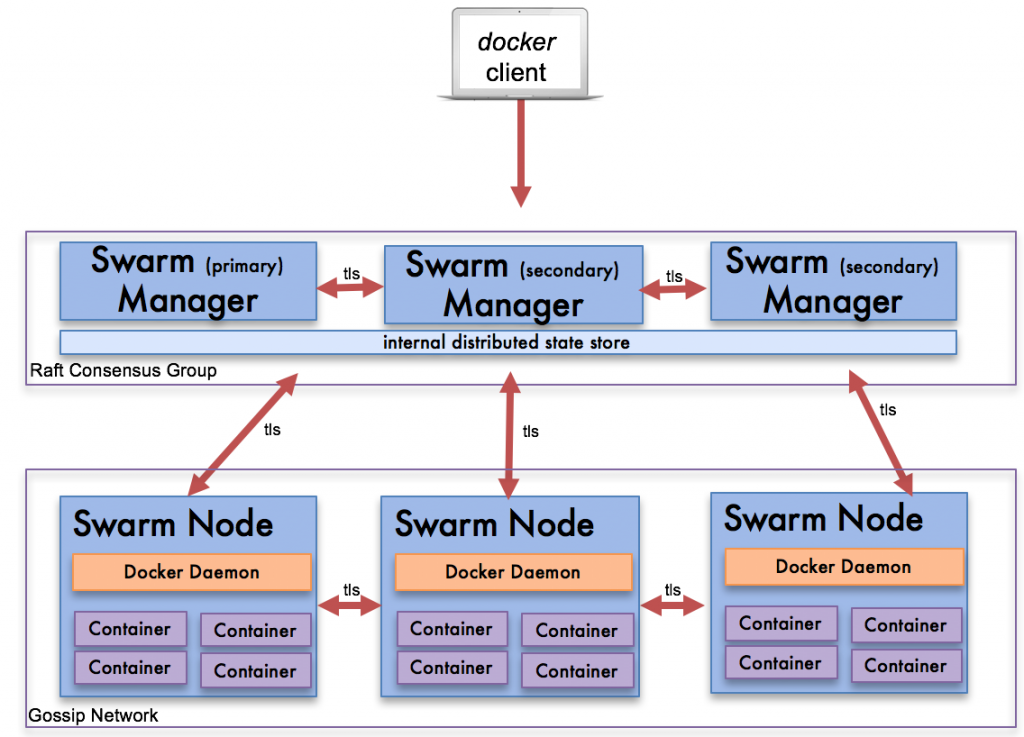

Docker Swarm is a native clustering and container orchestration tool for Docker containers. It allows users to create and manage a cluster of Docker nodes, which enables better scalability, higher availability, and smooth deployment of containerized applications. Docker Swarm simplifies the management of large numbers of containers spread across multiple hosts by providing a direct way to interact with the cluster of Docker nodes.

In other words, Docker Swarm turns a pool of Docker hosts into what behaves like a single virtual system, simplifying the management and scaling of containerized applications across multiple machines. It abstracts away much of the complexity of distributed systems and provides a straightforward interface for deploying and managing containers.

In Docker Swarm, this abstraction is built on services. A service defines the desired state of an application, including the number of replicas, the container image, the network configuration, and other parameters.

Docker Swarm continuously monitors the cluster to ensure that the actual state of the application matches the desired state defined by its services. If a container or a node fails, Swarm starts replacement containers to restore the declared number of replicas and maintain the desired level of availability and performance.
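The desired-state idea described above can be illustrated with a small reconciliation loop. This is only a minimal sketch, not Swarm's actual implementation: the `Service` class, the `reconcile` function, and the task naming scheme are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """Hypothetical stand-in for a service definition (not a Docker API)."""
    name: str
    desired_replicas: int
    running: list = field(default_factory=list)  # IDs of healthy tasks


def reconcile(service: Service, next_id: int = 0) -> int:
    """Start or stop tasks until the running count equals the desired count.

    Returns the next unused task number so IDs are never reused.
    """
    # Too few tasks: start new ones.
    while len(service.running) < service.desired_replicas:
        service.running.append(f"{service.name}.{next_id}")
        next_id += 1
    # Too many tasks: stop the most recently started ones.
    while len(service.running) > service.desired_replicas:
        service.running.pop()
    return next_id


web = Service(name="web", desired_replicas=3)
next_id = reconcile(web)            # initial deployment starts three tasks
web.running.remove("web.1")         # simulate a task lost to a node failure
next_id = reconcile(web, next_id)   # the loop starts one replacement task
print(web.running)
```

The operator only ever changes `desired_replicas`; the loop works out which tasks to start or stop to get there.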

Docker Swarm is also built on the same container technology as Docker itself, so it integrates seamlessly with existing Docker workflows and tools. Developers can use familiar Docker commands and Dockerfiles to build, ship, and run applications on a Swarm cluster without having to learn new paradigms or tools for deployment.

Another important aspect of Docker Swarm is its built-in support for high availability and fault tolerance. By default, Docker Swarm distributes containers across multiple nodes in the cluster, so that the application remains accessible and operational even if one or more nodes fail.

Features of Docker Swarm:

Scalability: Docker Swarm lets you scale an application horizontally, up or down, by adding nodes to the cluster or removing them from it.

Service Discovery: Docker Swarm provides built-in service discovery, allowing containers within the swarm to find and communicate with each other using only service names. Because services are discovered automatically within the cluster, communication between them is seamless.

Rolling Updates and Rollbacks: Docker Swarm supports rolling updates, which deploy a new version of a service without downtime by incrementally replacing old containers with new ones. If an update fails, the service can be rolled back to its previous version.

Load Balancing: Docker Swarm includes an integrated load balancer that automatically distributes incoming requests across the containers running the same service, improving overall performance.

High Availability: Docker Swarm keeps the application running through its failover capabilities. When a node fails, its containers are rescheduled on the remaining healthy nodes.

Multi-Environment Support: Docker Swarm supports deploying applications across different environments, including development, testing, and production. You can define environment-specific configurations and deploy applications across multiple Docker Swarm clusters.

Docker Stack Integration: Docker Swarm integrates with Docker Stack, which lets you define and deploy a multi-service application from a single YAML file. Docker Stack simplifies the management of complex application stacks and supports efficient collaboration and automation.
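The load-balancing feature listed above can be pictured as requests being handed to the replicas of a service in turn. The sketch below shows plain round-robin distribution as one simple strategy; it is an illustration only, since the real load balancing happens inside Swarm's networking layer rather than in application code. The `round_robin` function and the replica names are hypothetical.

```python
from collections import Counter
from itertools import cycle


def round_robin(replicas, request_count):
    """Assign each incoming request to the next replica in turn."""
    targets = cycle(replicas)
    return [next(targets) for _ in range(request_count)]


# Three hypothetical replicas of the same service.
replicas = ["web.1", "web.2", "web.3"]

assignments = round_robin(replicas, request_count=7)
per_replica = Counter(assignments)

for replica, handled in sorted(per_replica.items()):
    print(f"{replica} handled {handled} request(s)")
```

With seven requests and three replicas, no replica handles more than one request more than any other, which is the even spread a load balancer aims for.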

Architecture of Docker Swarm:

What is Kubernetes?

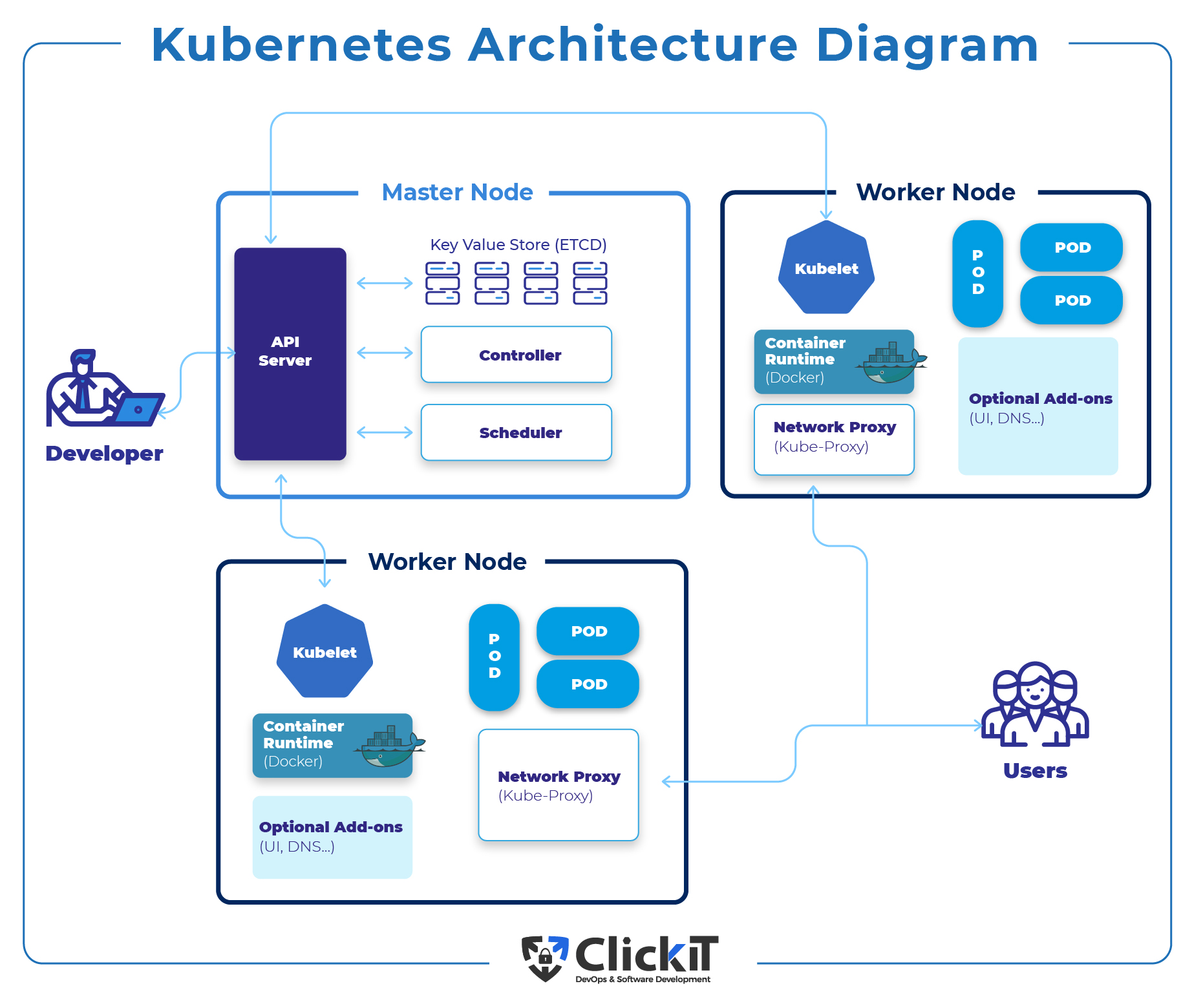

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF). It automates the deployment, scaling, and management of containerized applications, and provides a powerful infrastructure for building and running scalable, resilient, and portable applications in cloud-native environments.

Kubernetes takes a group of machines and exposes them as if they were a single system. This is similar to running an application locally: you do not care which CPU core executes it. You just run the application and let the operating system take care of the rest. Kubernetes does the same at the data center level. It does not matter whether one machine or one thousand machines are available. The way we interact with Kubernetes stays the same: we tell it what we want, and it does its best to make that happen.

The Kubernetes API allows us to declare what we want without having to know exactly how it will be achieved. This abstracts away the complexity of the underlying infrastructure, so developers can focus on building and deploying applications.

Kubernetes works declaratively: users specify the desired state of their applications in YAML or JSON manifest files, and Kubernetes continuously monitors the cluster to ensure that the actual state matches the desired state. Kubernetes uses a cluster-based architecture, in which a cluster consists of a collection of nodes. Each node can be a physical machine or a virtual machine.
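As a rough illustration of such a manifest, the sketch below builds a minimal Deployment as a Python dictionary and prints it as JSON. The field layout follows the standard `apps/v1` Deployment schema, while the `deployment_manifest` helper, the application name `web`, and the image tag `nginx:1.27` are placeholder assumptions for the example.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired state: how many copies of the pod should be running.
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Placeholder values, chosen only for illustration.
manifest = deployment_manifest("web", "nginx:1.27", replicas=3)
print(json.dumps(manifest, indent=2))
```

Saved to a file, output like this could be submitted with `kubectl apply -f <file>`. The manifest states only what should exist (three replicas of one image), not the steps needed to get there.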

The architecture of Kubernetes follows a control plane and worker node model (traditionally described as master and worker), in which the master node controls and manages the cluster while the worker nodes execute the actual workloads. The master node consists of several components: the Kubernetes API server, a scheduler, a controller manager, and etcd. The API server is the primary interface to the cluster and allows users to create, modify, and delete resources. The scheduler places workloads on nodes based on resource availability and scheduling constraints. The controller manager runs the controllers that regulate the state of the cluster, ensuring that the actual state matches the desired state. Finally, etcd is a distributed key-value store that serves as the primary data store for Kubernetes, holding the configuration data and the current state of the cluster.
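To make the scheduler's role more concrete, here is a deliberately simplified sketch of placing a workload based on resource availability: first filter out nodes that cannot fit the request, then prefer the node with the most capacity left over. The real Kubernetes scheduler considers many more constraints; the `pick_node` function, the node names, and the resource figures are hypothetical.

```python
from typing import Optional


def pick_node(nodes, cpu_request, mem_request) -> Optional[str]:
    """Return the name of a node that can fit the request, or None.

    `nodes` maps a node name to its free resources, for example
    {"cpu": 2.0, "mem": 4096} (CPU cores and MiB of memory).
    """
    # Filter step: keep only nodes with enough free CPU and memory.
    feasible = {
        name: free
        for name, free in nodes.items()
        if free["cpu"] >= cpu_request and free["mem"] >= mem_request
    }
    if not feasible:
        return None  # nothing fits; the workload stays unscheduled

    # Score step: prefer the node with the most resources left afterwards.
    return max(
        feasible,
        key=lambda n: (feasible[n]["cpu"] - cpu_request,
                       feasible[n]["mem"] - mem_request),
    )


nodes = {
    "node-a": {"cpu": 0.5, "mem": 512},
    "node-b": {"cpu": 2.0, "mem": 4096},
    "node-c": {"cpu": 1.0, "mem": 1024},
}

chosen = pick_node(nodes, cpu_request=1.0, mem_request=1024)
print(chosen)
```

Here `node-a` is filtered out because it is too small, and `node-b` wins over `node-c` because it has more capacity left after placement.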

Features of Kubernetes:

Self-Healing: Kubernetes continuously monitors the health of applications and automatically restarts or replaces containers that fail.

Rolling Updates and Rollbacks: Kubernetes supports rolling updates, which deploy a new version of an application without downtime by gradually replacing old containers with new ones. If an update misbehaves, it can be rolled back to a previous version.

Container Orchestration: Kubernetes automates the deployment, scaling, and management of containerized applications based on the desired state that users define.

Automatic Scaling: Kubernetes provides built-in support for automatic scaling of applications based on CPU or memory utilization, ensuring that applications can dynamically adapt to changing workloads.

Load Balancing: Kubernetes automatically distributes incoming traffic across the containers running the same application, improving overall performance.

Monitoring and Logging: Kubernetes integrates with a variety of monitoring and logging solutions, allowing users to track the health and performance of their applications.

Storage Orchestration: Kubernetes provides storage orchestration capabilities, allowing users to dynamically provision and mount storage volumes to containers.

Configuration Management: Kubernetes supports configuration management through ConfigMaps and Secrets, enabling users to separate configuration from application source code and to manage sensitive information more securely.

Batch Execution: Kubernetes supports batch execution and job management, allowing users to run one-off or scheduled batch jobs efficiently within the cluster.

Higher Security: Kubernetes provides a range of security features, including role-based access control (RBAC), network policies, pod security standards, and encryption, enabling users to secure their containerized applications and sensitive data.

Community: Kubernetes has a very large community of contributors, vendors, and users, which provides extensive documentation, tutorials, and support resources, as well as a rich ecosystem of third-party tools and integrations.
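The automatic scaling feature listed above rests on a simple proportional rule. The Kubernetes documentation gives the core calculation of the Horizontal Pod Autoscaler as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that rule and clamps the result to a minimum and maximum replica count. The function name, parameter names, and default limits are illustrative assumptions, and the refinements of the real autoscaler (such as its tolerance band and stabilization behavior) are left out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the proportional scaling rule, then clamp to the allowed range."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Average CPU utilization is 90% against a 60% target: scale out.
scale_up = desired_replicas(4, current_metric=90, target_metric=60)

# Utilization drops to 30% against the same target: scale in.
scale_down = desired_replicas(4, current_metric=30, target_metric=60)

# The raw result (16) exceeds the configured maximum, so it is capped.
capped = desired_replicas(8, current_metric=100, target_metric=50,
                          max_replicas=10)

print(scale_up, scale_down, capped)
```

Because the rule is proportional, a workload running at 1.5 times its target utilization ends up with 1.5 times as many replicas, within the configured limits.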

Architecture of Kubernetes:

Key differences between Docker Swarm and Kubernetes

| Docker Swarm | Kubernetes |
| --- | --- |
| Native orchestration tool for Docker containers | Comprehensive container orchestration platform |
| Limited scaling capabilities | Advanced scaling capabilities |
| Simple architecture with few components | More complex architecture with multiple components |
| Limited self-healing capabilities | Advanced self-healing capabilities for high availability |
| Basic support for rolling updates | Extensive support for rolling updates and rollbacks |
| Smaller community than Kubernetes | Large, active community with strong support |
| Gentle learning curve for Docker users | Steep learning curve due to its complexity and rich feature set |
| Suitable for smaller-scale deployments | Ideal for large-scale, complex deployments and cloud-native environments |

Other basic comparisons between Docker Swarm and Kubernetes

| Point of comparison | Kubernetes | Docker Swarm |
| --- | --- | --- |
| Installation | Complex | Simple |
| GUI | Detailed view | No GUI; requires third-party tools |
| Availability features | Multiple | Minimal |
| Scalability | All-in-one scaling based on current traffic | Prioritizes fast scaling (reportedly about five times faster than Kubernetes) over automatic scaling |
| Horizontal auto-scaling | Yes | No |
| Monitoring capabilities | Yes, built-in | No; requires third-party tools |
| Security features | Supports multiple security features | Supports multiple security features |
| CLI (Command Line Interface) | Requires a separate CLI | CLI available out of the box |

Overall, Docker Swarm is often preferred for its simplicity and tight integration with Docker, whereas Kubernetes is better suited to complex, enterprise-grade environments that require scalability, resilience, and an extensive feature set. Organizations typically choose between the two based on their specific requirements, existing infrastructure, and level of expertise. Some opt for Docker Swarm for smaller projects, while others use Kubernetes for its advanced capabilities and wide industry adoption, especially in cloud-native environments. Either way, both platforms play a crucial role in modern container orchestration, empowering developers and DevOps teams to build, deploy, and manage containerized applications efficiently and effectively.